3 Technical Quality: Validity

3.1 Overall Validity, Including Validity Based on Content

As elaborated by Messick (1989), a validity argument involves a claim, supported by evidence, that is evaluated to reach a judgment. Assessment systems have three essential components: (a) constructs (what to measure), (b) the assessment instruments and processes (approaches to measurement), and (c) use of the test results (for specific populations). Validation is a judgment about the degree to which each of these components is clearly defined and adequately implemented.

Validity is a unitary concept with multifaceted processes of reasoning about a desired interpretation of test scores and the subsequent uses of those scores. This process addresses two important questions, which are identical regardless of whether the students tested have disabilities: (1) How valid is the interpretation of a student’s test score? and (2) How valid is it to use these scores in an accountability system? Validity evidence may be documented at both the item and total test levels. Evidence on content coverage, response processes, internal structure, and relations to other variables is documented following Association et al. (2018). This report also follows the essential data requirements of the federal government for the peer review process. The critical elements highlighted in Section 4 of the federal peer review guidance (with examples of acceptable evidence) include (a) academic content standards, (b) academic achievement standards, (c) a statewide assessment system, (d) reliability, (e) validity, and (f) other dimensions of technical quality.

In this technical report, data are presented to support the claim that Oregon’s AA-AAAS provides the state with technically adequate student performance data to ascertain proficiency on grade level state content standards for students with significant cognitive disabilities, which is its defined purpose. The AA-AAAS is linked to grade level academic content, generates reliable outcomes at the test level, includes all students, has a cogent internal structure, and fits within a network of relations within and across various dimensions of content related to and relevant for making proficiency decisions. Sample items that convey the design and sample content of ORExt items are provided in the ORExt Electronic Practice Tests.

The assessments are administered and scored in a standardized manner. Assessors who administer the ORExt are trained to provide the level of support needed for appropriate test administration on an item-by-item basis. Training outlines four levels of support: full physical support, partial physical support, prompted support, and no support. Items were designed to document students’ skill and knowledge on grade level academic content standards, and the level of support provided is designed not to interfere with the construct being measured. The ORExt uses a single administration type, patterned after the former Scaffold version of the assessment. The Assessor presents the prompt; if the student does not respond, the Assessor reads a directive statement designed to focus the student’s attention on the test item and then repeats the prompt. If the student still does not respond, the Assessor repeats the prompt as needed and, if no response is obtained, scores the item as incorrect and moves on to the next item. Training documentation is provided in the QT Training Video.

Given the content-related evidence presented on test development, alignment, training, administration, and scoring; the reliability evidence reflected in adequate test-level coefficients; and, finally, the relations of tests across subject areas (which provide criterion-related evidence), we conclude that the alternate assessment, judged against alternate achievement standards, allows valid inferences to be made about state accountability proficiency standards.

3.1A Alignment Between AA-AAAS and Academic Content Standards

The foundation of validity evidence from content coverage for the ORExt comes in the form of test specifications (see OR Extended Assessment-Item Development Info) and the ORExt Test Blueprint. Among other things, Association et al. (2018) suggest that specifications should “define the content of the test, the proposed test length, the item formats…”.

All items are linked to grade level standards, and a prototype was developed using principles of universal design together with traditional, content-referenced multiple-choice item writing techniques. The most important consideration in these initial steps was language complexity and access for students who use receptive as well as expressive communication. Content breadth and depth were also addressed. A single ORExt test form was developed using a scaffold approach, which allows students with very limited attention to access test content, while the supports are not used for students who do not need them.

The ORExt tests were developed iteratively: items were written, piloted, reviewed, and edited in successive drafts (the item writer training materials are provided in the Item Writer Training). The process drew on existing panels of veteran teachers who have worked with the Oregon Department of Education (ODE) in various advisory roles on testing content in general and special education, using the same processes and criteria, and added qualified newer teachers over time to keep the panels current. Behavioral Research and Teaching (BRT) personnel conducted the internal reviews of content. After the internal development of prototype items, all subsequent reviews involved Oregon content and special education experts with significant training and K-12 classroom experience.

The ORExt builds continuous improvement into its test design through annual field-testing in all content areas, with an average of 25% new items. Field-test items are compared to operational items on item functioning and test design factors, and the resulting data are used to replace items annually. New items are incorporated when they fill a needed gap in categorical concurrence or provide a wider range of functioning across complexity levels (low, medium, and high), comparable to Webb (2002).

BRT employed a multi-stage development process in 2014-15 to ensure that test items were linked to relevant content standards, were accessible for students with significant cognitive disabilities, and were free of any perceived item bias. The item review process included 51 reviewers with an average of 22 years of experience in education. Overall, the ORExt assessments have been determined to demonstrate strong linkage to grade level academic content. Full documentation of the initial 2014 linkage study and a new, independent alignment study conducted in spring 2017 is provided in the Oregon Extended Assessment Alignment Study. Based on student performance from the 2016-2017 testing year, new and Grade 7 Math field test items were written in fall 2017.

The summary section of the independent alignment study report states that, “Oregon’s Extended Assessments (ORExt) in English Language Arts, Mathematics, and Science were evaluated in a low-complexity alignment study conducted in Spring of 2017. Averages of reviewer professional judgments over five separate evaluations were gathered, reviewed, and interpreted in the pages that follow. In the three evaluations that involved determining the relationship between standards and items, reviewers identified sufficient to strong relationships among assessment components in all grades and all subject areas. In the two evaluations involving Achievement Level Descriptors, reviewers identified thirty instances of sufficient to strong relationships out of thirty-four possible relationship opportunities resulting in an overall affirmed relationship with areas for refinements identified.”

Because the assessments demonstrate sufficient to strong linkage to Oregon’s general education content standards, and descriptive statistics show that each content area assessment is functioning as intended, it is appropriate to infer that these standards define the expectations measured by the Oregon Extended assessments.

The Oregon Extended assessments yield scores that reflect the full range of achievement implied by Oregon’s alternate achievement standards. Evidence for this claim is found in the standard setting documentation (see ORExt Assessment Technical Report on Standard Setting). Standards were set for all subject areas on June 15-17, 2015, and included achievement level descriptors and cut scores, which define Oregon’s new alternate achievement standards (AAS). The State Board of Education officially adopted the AAS on June 25, 2015.

3.1B AA-AAAS Linkage to General Content Standards

Results of the analysis of the linkage of the new Essentialized Assessment Frameworks (EAF), composed of Essentialized Standards (EsSt), to grade level CCSS in English language arts and mathematics, and to ORSci and NGSS in science, are presented in Section 3.1A. The claim is that the EsSt are sufficiently linked to grade level standards and that the ORExt items are aligned to the EsSt. In addition to linkage information between grade level content standards and the EsSt, the linkage study presents alignment information for the items on the new ORExt relative to the EsSt. The Extended assessments have been determined to link sufficiently to grade level academic content standards. Field test items are added each year based on item alignment to standards.

The Oregon Extended assessments link to grade level academic content, as reflected in the item development process. In addition, each operational item on the Oregon Extended assessment was evaluated for alignment in two comprehensive linkage studies: an internal study performed in 2014 and an independent alignment study performed in spring 2017 (see Section 3.1A). Reviewers in both studies included special and general education experts with content knowledge and experience in addition to special education expertise.

According to the independent linkage study report, the spring 2017 review was conducted by expert reviewers with professional backgrounds in Special Education (the population), Assessment, or Oregon’s adopted content standards. Reviewers were assigned to review grade-level items relative to their experience and expertise. In all, 39 reviewers participated. Thirty-four (34) participated in all 5 evaluations: thirteen (13) for the English Language Arts review, fifteen (15) for the Mathematics review, and six (6) for the Science review. All participants were assigned to at least one specific content area, as shown in Table 1; four individuals were assigned to two areas of review. The 39 individuals who participated in the study had a robust legacy of experience in the field and in the state. Participants represented 25 unique school districts across the state, representing both urban and rural perspectives. All 39 participants held current teaching licenses, and two also held administrative licenses. Years of experience in their area ranged from 3 to 30 years, with an average of 17 years (mode = 11 years, median = 16 years); one individual indicated 50 years of experience in the field. Three of the 39 individuals held a Bachelor’s degree only, and thirty-six held a Bachelor’s degree and at least one Master’s degree; of these, two also held a doctoral degree. Fourteen (36%) of the individuals identified as experts in a specific content area, and 25 (64%) identified special education as their primary area of expertise.

These reviewers were trained through synchronous webinars on linkage/alignment and on item depth, breadth, and complexity, and then completed their ratings online via BRT’s Distributed Item Review (DIR) website and on Excel spreadsheets shared electronically with the researcher (see DIR Overview for a system overview). Mock linkage ratings were conducted to address questions and ensure appropriate calibration. Reviewers rated each essentialized standard’s link to its source standard, as defined by the test developers, on a 3-point scale (0 = no link, 1 = sufficient link, 2 = strong link). Items were, in turn, rated for alignment to the essentialized standard on a 3-point scale (0 = insufficient alignment, 1 = sufficient alignment, 2 = strong alignment). When averaged across reviewers, 1.00 to 1.29 was considered low, 1.30 to 1.69 sufficient, and 1.70 to 2.00 strong. Reviewers were asked to comment on any essentialized standard or item whose linkage they rated 0.

Overall, the 2017 independent alignment study concluded: “First, reviewers were asked to conduct an affirmational review of the rationale used by test developers to omit certain content standards.” This finding was used to infer that the final standards selected for inclusion or omission in Oregon’s Extended Assessment were chosen rationally and that the final scope of content standards can be considered justifiable for the population for the subject area.

Conclusion: This review, with a lowest average rate of .82 (on a scale of 1), permitted the inference: the scope of the standards selected for translation to Essentialized Standards were rationally selected. None of the standards de-selected (for inaccessibility or for being covered elsewhere) were strongly identified for re-inclusion, nor were identified as a critical hole for this population of students.

Second, reviewers were asked to identify the strength of the link between the source standard and the Essentialized Standard. This finding was used to infer that the process undertaken to essentialize a given Source Standard did not fundamentally or critically alter the knowledge or skill set intended by the source standard for this population of students (further confirming that the content selected for the assessment was comparable).

Conclusion: This review, with a range of 1.5 - 1.9 (on a scale of 2) permitted the inference: the Essentialized Standards were found to link sufficiently to the source standards on average beyond the “sufficient” average of 1.0.

Third, reviewers were asked to identify the strength of the alignment between the Essentialized Standards and the items and to review the items developed using the Essentialized Standards for bias, and accessibility. The finding from this review was used to infer that the items written for this grade and subject area (using these Essentialized Standards) were adequately linked to the Essentialized Standards, were free from bias, and were accessible to students with significant cognitive disabilities.

Conclusion: The alignment review (1.32 - 1.89), accessibility review (.67 - 1.0), and freedom from bias review (.65 - 1.0) all permitted the inference that the test items indicated a relationship with the source standards, the test items were not overly biased towards or against any particular group of individuals, and the test items were written such that the content and intent could be accessed by students with the most significant cognitive disabilities. (**Note: this range was skewed by feedback from one reviewer –ELA-Grade 3 - whose comments were noted in this study. Removing that individual’s comments would result in a range of .90 - 1.0 accessibility range and .89 - 1.0 freedom from bias range respectively.)

Fourth, reviewers were asked to review the statements used to describe student achievement on the test (the Achievement Level Descriptors) and their alignment to the Essentialized Standards that the students were tested on. The findings from this review were used to infer that the skills and achievements described by the Achievement Level Descriptors for each subject and grade level are aligned with the content standard being measured.

Conclusion: The reviews ranging from .68* - 1.0 permitted the inference that the descriptions made regarding student skillset were an accurate reflection of the standards from which the assessment was developed at all three levels evaluated. (*One outlier for ELA-Grade 4 provided a review of a .52 average).

Fifth, and finally, reviewers were asked to review the alignment of the Achievement Level Descriptors to the items. The finding from this review was used to infer that each item in the developed assessment(s) was appropriately aligned to its associated Achievement Level Descriptor (further confirming that decisions made using this test were aligned with the intent of the source standard).

Conclusion: Fourteen of the seventeen grade-level reviews resulted in an average reviewer range of .67 - 1.0 indicating an appropriate alignment between ALDs and the items as written. This review permitted the inference that, overall, the Achievement Level Descriptors are accurate reflections of the items. In three instances (Mathematics-Grades 3 and 4, and ELA-Grade 8) the average alignment by reviewer was .5 (indicating that one of the two individuals in that category did not agree that the items and ALDs were aligned).”

3.2 Validity Based on Cognitive Processes

Evidence of content coverage concerns judgments about “the extent to which the content domain of a test represents the domain defined in the test specifications” (Association et al., 2018). As a whole, the ORExt is composed of sets of items that sample student performance in the intended domains. The expectation is that the items cover the full range of intended domains, with enough items that scores credibly represent student knowledge and skills in those areas. Without a sufficient number of items, scores are open to a validity threat from construct under-representation (Messick, 1989).

The ORExt assessment is built upon a variety of items that address a wide range of performance expectations rooted in the CCSS, NGSS, and ORSci content standards. The challenge built into the test design is based first on the content within each standard in English language arts, mathematics, and science. That content is reduced in depth, breadth, and complexity (RDBC), in a manner verified by Oregon general and special education teachers, to develop assessment targets that are appropriate for students with the most significant cognitive disabilities. The ORExt assessments use universal design principles to include all students in the assessment process while still challenging higher performing students. For students who have very limited to no communication and are unable to access even the most accessible items on the ORExt, the Oregon Observational Rating Assessment (ORora) was first implemented in 2015-16. The ORora is completed by teachers and documents the student’s level of communication complexity (expressive and receptive), as well as level of independence in the domains of attention/joint attention and mathematics. A complete report of ORora results from 2021-22 is provided below.

| Grade | Total ORExt N | ORora Subsample n (% of total) |
|---|---|---|
| Grade 3 | 386 | 88 (22.8%) |
| Grade 4 | 394 | 81 (20.56%) |
| Grade 5 | 425 | 112 (26.35%) |
| Grade 6 | 436 | 107 (24.54%) |
| Grade 7 | 438 | 114 (26.03%) |
| Grade 8 | 405 | 112 (27.65%) |
| High School | 311 | 98 (31.51%) |
| Total | 2795 | 712 (25.47%) |
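The subsample percentages in the table above are the ratio of the ORora subsample to the total ORExt count for each grade. The sketch below recomputes them from the tabled counts as a consistency check. The dictionary layout and function names are illustrative only.

```python
# Counts from the table above: grade -> (total ORExt N, ORora subsample n)
ORORA_COUNTS = {
    "Grade 3": (386, 88),
    "Grade 4": (394, 81),
    "Grade 5": (425, 112),
    "Grade 6": (436, 107),
    "Grade 7": (438, 114),
    "Grade 8": (405, 112),
    "High School": (311, 98),
}


def subsample_percent(n: int, total: int) -> float:
    """Percentage of the total, rounded to two decimals as in the table."""
    return round(100 * n / total, 2)


def summarize(counts: dict[str, tuple[int, int]]) -> tuple[int, int, dict[str, float]]:
    """Return grand totals and the per-grade and overall subsample percentages."""
    rows = {grade: subsample_percent(n, total) for grade, (total, n) in counts.items()}
    grand_total = sum(total for total, _ in counts.values())
    grand_n = sum(n for _, n in counts.values())
    rows["Total"] = subsample_percent(grand_n, grand_total)
    return grand_total, grand_n, rows


grand_total, grand_n, rows = summarize(ORORA_COUNTS)
print(grand_total, grand_n)  # 2795 712
for grade, pct in rows.items():
    print(f"{grade}: {pct}%")
```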

| Grade | Mean (SD) Overall ORora Score | Score Range |
|---|---|---|
| Grade 3 | 47.84 (15.41) | [21, 80] |
| Grade 4 | 47.54 (16.56) | [20, 80] |
| Grade 5 | 52.99 (15.99) | [20, 80] |
| Grade 6 | 52 (18.11) | [20, 80] |
| Grade 7 | 54.35 (16.9) | [20, 80] |
| Grade 8 | 53.95 (16.24) | [10, 80] |
| High School | 53.2 (16.75) | [17, 80] |
| Total Average | 51.97 (16.78) | [10, 80] |

| Domain and Grade | Mean (SD) Score | Score Range |
|---|---|---|
| Attention/Joint Attention (Attn) | | |
| Grade 3 | 11.9 (3.73) | [5, 20] |
| Grade 4 | 12.01 (4.05) | [5, 20] |
| Grade 5 | 12.89 (4.05) | [5, 20] |
| Grade 6 | 12.86 (4.39) | [5, 20] |
| Grade 7 | 13.74 (4.18) | [5, 20] |
| Grade 8 | 13.12 (3.94) | [5, 20] |
| High School | 13.49 (4.05) | [5, 20] |
| Expressive Communication (Exp) | | |
| Grade 3 | 11.59 (4.6) | [5, 20] |
| Grade 4 | 11.47 (4.94) | [5, 20] |
| Grade 5 | 12.62 (5.01) | [5, 20] |
| Grade 6 | 12.64 (5.34) | [5, 20] |
| Grade 7 | 13.05 (5.12) | [5, 20] |
| Grade 8 | 12.97 (4.88) | [5, 20] |
| High School | 12.84 (5.03) | [5, 20] |
| Mathematics (Math) | | |
| Grade 3 | 11.49 (4.19) | [5, 20] |
| Grade 4 | 11.54 (4.52) | [5, 20] |
| Grade 5 | 13.01 (4.26) | [5, 20] |
| Grade 6 | 12.97 (5.01) | [5, 20] |
| Grade 7 | 13.2 (4.64) | [5, 20] |
| Grade 8 | 13.09 (4.49) | [5, 20] |
| High School | 13.03 (4.71) | [5, 20] |
| Receptive Communication (Recp) | | |
| Grade 3 | 12.86 (4.9) | [5, 20] |
| Grade 4 | 12.73 (4.78) | [5, 20] |
| Grade 5 | 14.47 (4.51) | [5, 20] |
| Grade 6 | 13.68 (4.86) | [5, 20] |
| Grade 7 | 14.5 (4.65) | [5, 20] |
| Grade 8 | 14.94 (4.36) | [5, 20] |
| High School | 13.98 (4.59) | [5, 20] |

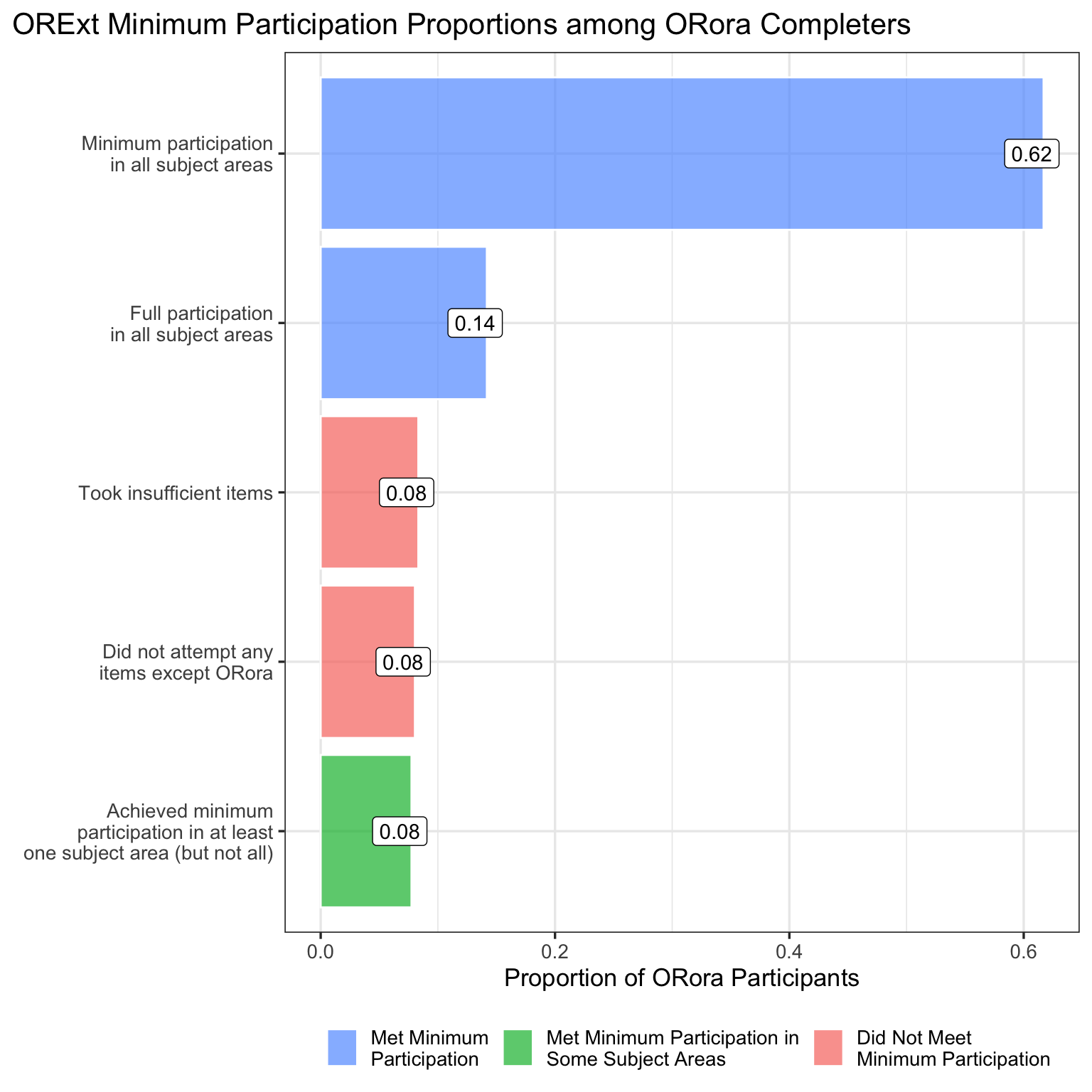

Below is a breakdown of minimum participation on the ORExt for students who took the ORora. Minimum participation is defined as having attempted at least 10 items. Most ORora participants met at least minimum participation in every subject area tested. Sixty-two percent met minimum participation in all subject areas (e.g., Math and ELA for grade 3; Math, ELA, and Science for grade 8), and a further 14% had full participation in all subject areas. A small proportion (8%) met minimum participation in some but not all subject areas (e.g., Math but not ELA for grade 4; Science and Math but not ELA for grade 11). The remaining 16% of ORora participants did not meet minimum participation: 8% of the total attempted too few items, and 8% of the total did not attempt any items beyond the ORora.
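The participation categories above can be sketched as a classification over the number of items each student attempted per subject. This is a minimal illustration under stated assumptions. "Full participation" is assumed to mean attempting every item on every test, and the test lengths in the usage example are hypothetical placeholders, not actual ORExt form lengths. Only the 10-item minimum comes from the report.

```python
MIN_ITEMS = 10  # minimum participation threshold stated in the report


def participation_status(attempted: dict[str, int], test_lengths: dict[str, int]) -> str:
    """Classify one ORora participant's ORExt participation (hypothetical helper).

    attempted: subject -> number of ORExt items the student attempted.
    test_lengths: subject -> number of items on that test (assumed values).
    Assumes "full participation" means attempting every item on every test.
    """
    if all(n == 0 for n in attempted.values()):
        return "no items attempted"
    if all(attempted[s] >= test_lengths[s] for s in attempted):
        return "full participation in all subjects"
    met = [s for s, n in attempted.items() if n >= MIN_ITEMS]
    if len(met) == len(attempted):
        return "minimum participation in all subjects"
    if met:
        return "minimum participation in some subjects"
    return "insufficient items attempted"


# Hypothetical test lengths for a grade tested in ELA and Math.
lengths = {"ELA": 36, "Math": 36}
print(participation_status({"ELA": 12, "Math": 3}, lengths))  # minimum participation in some subjects
```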

Fifty-one reviewers analyzed all ORExt items for bias, sensitivity, accessibility to the student population, and alignment to the Essentialized Standards. A total of 21 reviewers were involved in the English language arts item reviews. An additional 21 reviewers were involved in the Mathematics item reviews. Science employed nine reviewers. Reviewers were organized into grade level teams of two special educators and one content specialist.

The linkage/alignment studies documented above, including reviews of linkage, content coverage, and depth of knowledge, provide substantive evidence that the ORExt items tap the intended cognitive processes and are at the appropriate grade level.

3.3 Validity Based on Internal Structure (Content and Function)

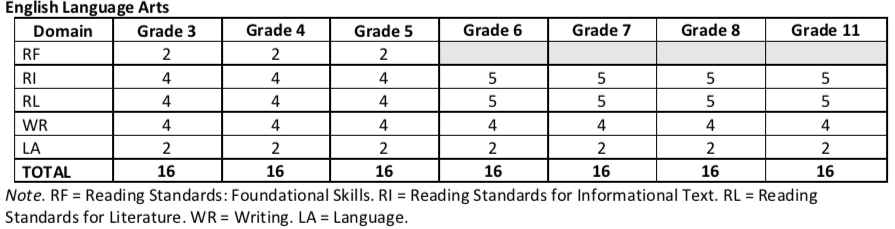

The Oregon Extended assessments reflect patterns of emphasis that are supported by Oregon educators, as indicated by the following three tables, which show the balance of standard representation by grade level for English language arts, mathematics, and science on the ORExt. Representation ratios can be calculated by dividing the number of EsSt in each domain by the total within the respective column. For example, in Grade 3 Reading, approximately 25% of the items are in the Reading Standards for Literature domain, because that domain has 4 of the 16 written Essentialized Standards (EsSt) (4/16 = 25%).
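The representation-ratio calculation described above is sketched below. The report gives only the Grade 3 Reading figure of 4 of 16 EsSt in the Reading Standards for Literature domain. The remaining 12 EsSt are therefore grouped into a single placeholder entry rather than invented domain counts. The function name is hypothetical.

```python
def representation_ratios(esst_counts: dict[str, int]) -> dict[str, float]:
    """Share of written EsSt in each domain: domain count / column total."""
    total = sum(esst_counts.values())
    return {domain: count / total for domain, count in esst_counts.items()}


grade3_reading = {
    "Reading Standards for Literature": 4,
    # Placeholder: the per-domain split of the other 12 EsSt is not given here.
    "All other Grade 3 Reading domains (combined)": 12,
}
ratios = representation_ratios(grade3_reading)
print(f"{ratios['Reading Standards for Literature']:.0%}")  # 25%
```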

The test blueprints below correspond directly to the number of EsSt written in each domain within the Essentialized Assessment Frameworks (EAF) spreadsheets. Additional grade level standards are addressed by the EsSt, because some EsSt link to multiple grade level content standards. However, the blueprints below reflect only the written EsSt and thus underrepresent the breadth of grade level content addressed by the ORExt.

The primary purpose of the ORExt assessment is to yield technically adequate performance data on grade level state content standards for students with significant cognitive disabilities in English language arts, mathematics, and science at the test level. All scoring and reporting structures mirror this design and have been shown to be reliable measures at the test level (see Section 4.1). The process of addressing any gaps or weaknesses in the system is accomplished via field-testing (see Section 3.1A).

3.3A Point Measure Correlations

Distributions of point measure correlations and outfit mean square statistics for operational items are provided below, by content area and grade. Point measure correlations show how item scores correlate with the latent overall score (the person measure); as such, a point measure correlation is interpreted as a correlation coefficient.
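A point measure correlation can be computed as the Pearson correlation between students' scores on one item and their estimated person measures (the latent scores). The sketch below assumes that person measures have already been estimated by a Rasch calibration. It uses made-up values for six students and is not the software used for the ORExt analyses.

```python
import math


def pearson_r(xs: list[float], ys: list[float]) -> float:
    """Pearson product-moment correlation between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def point_measure_correlation(item_scores: list[int], person_measures: list[float]) -> float:
    """Correlate scored responses to one item (e.g., 0/1) with person measures in logits."""
    return pearson_r([float(s) for s in item_scores], person_measures)


# Hypothetical data: six students' scores on one item and their Rasch person measures.
scores = [0, 0, 1, 0, 1, 1]
measures = [-1.2, -0.4, 0.1, 0.3, 0.9, 1.6]
print(round(point_measure_correlation(scores, measures), 2))  # 0.73
```

A positive value means that students with higher overall measures tend to score higher on the item. The tables below summarize these values across all operational items.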

All items included in the 2021-2022 operational assessment are represented. Point measure correlations ranged from 0.24 to 0.72 in ELA, 0.18 to 0.67 in Math, and 0.29 to 0.72 in Science.

| Content and Grade | Mean | Standard Deviation | Median | Min | Max |
|---|---|---|---|---|---|
| ELA | | | | | |
| Grade 3 | 0.50 | 0.10 | 0.49 | 0.24 | 0.66 |
| Grade 4 | 0.54 | 0.09 | 0.54 | 0.27 | 0.67 |
| Grade 5 | 0.58 | 0.06 | 0.57 | 0.43 | 0.70 |
| Grade 6 | 0.57 | 0.07 | 0.58 | 0.42 | 0.68 |
| Grade 7 | 0.61 | 0.05 | 0.61 | 0.46 | 0.71 |
| Grade 8 | 0.61 | 0.04 | 0.61 | 0.52 | 0.68 |
| High School | 0.64 | 0.06 | 0.65 | 0.49 | 0.72 |
| Math | | | | | |
| Grade 3 | 0.48 | 0.11 | 0.51 | 0.24 | 0.63 |
| Grade 4 | 0.47 | 0.12 | 0.52 | 0.18 | 0.64 |
| Grade 5 | 0.43 | 0.09 | 0.42 | 0.24 | 0.58 |
| Grade 6 | 0.51 | 0.07 | 0.53 | 0.32 | 0.65 |
| Grade 7 | 0.47 | 0.11 | 0.50 | 0.21 | 0.67 |
| Grade 8 | 0.39 | 0.09 | 0.40 | 0.21 | 0.57 |
| High School | 0.46 | 0.09 | 0.46 | 0.20 | 0.61 |
| Science | | | | | |
| Grade 5 | 0.60 | 0.09 | 0.62 | 0.29 | 0.71 |
| Grade 8 | 0.62 | 0.07 | 0.65 | 0.37 | 0.70 |
| High School | 0.65 | 0.05 | 0.65 | 0.53 | 0.72 |

| Content and Grade | Mean | Standard Deviation | Median | Min | Max |
|---|---|---|---|---|---|
| Reading | | | | | |
| Grade 3 | 0.50 | 0.08 | 0.50 | 0.27 | 0.60 |
| Grade 4 | 0.53 | 0.04 | 0.54 | 0.46 | 0.61 |
| Grade 5 | 0.56 | 0.05 | 0.57 | 0.44 | 0.65 |
| Grade 6 | 0.58 | 0.05 | 0.59 | 0.45 | 0.66 |
| Grade 7 | 0.61 | 0.04 | 0.62 | 0.52 | 0.68 |
| Grade 8 | 0.62 | 0.04 | 0.62 | 0.55 | 0.70 |
| High School | 0.64 | 0.05 | 0.65 | 0.51 | 0.71 |
| Writing | | | | | |
| Grade 3 | 0.59 | 0.09 | 0.58 | 0.46 | 0.73 |
| Grade 4 | 0.67 | 0.06 | 0.67 | 0.59 | 0.76 |
| Grade 5 | 0.68 | 0.09 | 0.70 | 0.55 | 0.79 |
| Grade 6 | 0.62 | 0.05 | 0.61 | 0.55 | 0.69 |
| Grade 7 | 0.68 | 0.08 | 0.68 | 0.50 | 0.76 |
| Grade 8 | 0.67 | 0.06 | 0.68 | 0.54 | 0.73 |
| High School | 0.70 | 0.05 | 0.72 | 0.61 | 0.77 |

3.3.0.1 Outfit Mean Square Distributions

Outfit mean square (OMS) values below 1.0 indicate that responses are more predictable than the model expects, so the item may be redundant, while values above 1.0 indicate more unpredictability than expected. Put another way, values close to 1.0 denote minimal distortion of the measurement system. Items with values above 2.0 are deemed insufficient for measurement purposes and are flagged for replacement.
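For dichotomously scored items, outfit mean square can be sketched as the unweighted mean of squared standardized residuals under the Rasch model. The sketch below assumes that person measures (θ) and the item difficulty (b) have already been estimated in logits. The function names are hypothetical, and the 2.0 flag mirrors the replacement rule stated above.

```python
import math


def rasch_p(theta: float, b: float) -> float:
    """Probability of a correct response under the dichotomous Rasch model."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))


def outfit_mnsq(thetas: list[float], responses: list[int], b: float) -> float:
    """Outfit mean square for one item: mean of squared standardized residuals."""
    total = 0.0
    for theta, x in zip(thetas, responses):
        p = rasch_p(theta, b)
        z = (x - p) / math.sqrt(p * (1.0 - p))  # standardized residual
        total += z * z
    return total / len(responses)


def flag_for_replacement(oms: float, threshold: float = 2.0) -> bool:
    """Flag items whose OMS exceeds the threshold used in this report."""
    return oms > threshold


# A single surprising response: a high-ability student (theta = 3) misses an easy item (b = -1).
oms = outfit_mnsq([3.0], [0], -1.0)
print(round(oms, 1), flag_for_replacement(oms))  # 54.6 True
```

Because outfit is unweighted, one very unexpected response from a student far from the item's difficulty can inflate it sharply. This is one reason a small number of items can show values well above 2.0 even when most items sit near 1.0.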

| Content and Grade | Mean | Standard Deviation | Min | Max |
|---|---|---|---|---|
| ELA | | | | |
| Grade 3 | 1.11 | 0.36 | 0.65 | 2.21 |
| Grade 4 | 1.06 | 0.32 | 0.54 | 1.88 |
| Grade 5 | 1.11 | 0.44 | 0.60 | 3.22 |
| Grade 6 | 0.92 | 0.19 | 0.55 | 1.43 |
| Grade 7 | 1.00 | 0.26 | 0.58 | 1.85 |
| Grade 8 | 0.95 | 0.25 | 0.57 | 1.73 |
| High School | 0.90 | 0.34 | 0.38 | 1.96 |
| Math | | | | |
| Grade 3 | 1.08 | 0.30 | 0.72 | 2.10 |
| Grade 4 | 1.06 | 0.35 | 0.69 | 2.73 |
| Grade 5 | 1.13 | 0.25 | 0.82 | 1.80 |
| Grade 6 | 0.96 | 0.23 | 0.51 | 1.58 |
| Grade 7 | 0.96 | 0.21 | 0.61 | 1.55 |
| Grade 8 | 0.98 | 0.19 | 0.69 | 1.49 |
| High School | 0.92 | 0.16 | 0.64 | 1.37 |
| Science | | | | |
| Grade 5 | 1.01 | 0.31 | 0.47 | 1.69 |
| Grade 8 | 0.90 | 0.28 | 0.54 | 1.59 |
| High School | 0.90 | 0.32 | 0.41 | 1.85 |

| Content and Grade | Mean | Standard Deviation | Min | Max |
|---|---|---|---|---|
| Reading | | | | |
| Grade 3 | 1.14 | 0.39 | 0.76 | 1.98 |
| Grade 4 | 1.15 | 0.27 | 0.83 | 1.90 |
| Grade 5 | 1.13 | 0.36 | 0.74 | 2.38 |
| Grade 6 | 0.96 | 0.20 | 0.58 | 1.41 |
| Grade 7 | 1.06 | 0.29 | 0.76 | 1.92 |
| Grade 8 | 1.00 | 0.26 | 0.69 | 1.74 |
| High School | 0.84 | 0.22 | 0.53 | 1.43 |
| Writing | | | | |
| Grade 3 | 0.99 | 0.34 | 0.56 | 1.39 |
| Grade 4 | 0.84 | 0.41 | 0.40 | 1.70 |
| Grade 5 | 1.04 | 0.65 | 0.48 | 2.41 |
| Grade 6 | 0.90 | 0.19 | 0.53 | 1.21 |
| Grade 7 | 0.91 | 0.31 | 0.58 | 1.69 |
| Grade 8 | 0.88 | 0.33 | 0.48 | 1.52 |
| High School | 0.99 | 0.81 | 0.35 | 3.51 |

While most OMS values were between 0.5 and 1.5, 11 items across four grades (Grades 3, 4, and 5 and High School) and four tests (ELA, Reading, Writing, and Math) had values above 2.0. These values are listed in the table below, arranged by test and grade.

| Content and Grade | Outfit |
|---|---|
| Writing | |
| Grade 5 | 2.41 |
| High School | 3.51 |
| High School | 2.14 |
| High School | 3.51 |
| High School | 2.14 |
| Reading | |
| Grade 5 | 2.38 |
| Math | |
| Grade 3 | 2.10 |
| Grade 4 | 2.73 |
| ELA | |
| Grade 3 | 2.15 |
| Grade 3 | 2.21 |
| Grade 5 | 3.22 |

3.3B Annual Measurable Objectives Frequencies & Percentages

Annual Measurable Objective (AMO) calculations were based on student performance on the ORExt, tied to the vertical scale using Rasch modeling. Overall results are largely consistent with 2016-17. In 2021-22, the percentage of students with significant cognitive disabilities achieving proficiency (AMO 3 or AMO 4) ranged from about 25% to 53% across grades and content areas.

| Content and Grade | AMO 1 (Does Not Yet Meet) | AMO 2 (Nearly Meets) | AMO 3 (Meets) | AMO 4 (Exceeds) |
|---|---|---|---|---|
| ELA | | | | |
| Grade 3 | 77 (20%) | 192 (51%) | 97 (26%) | 13 (3%) |
| Grade 4 | 121 (31%) | 125 (32%) | 108 (28%) | 37 (9%) |
| Grade 5 | 134 (32%) | 165 (40%) | 64 (15%) | 54 (13%) |
| Grade 6 | 113 (26%) | 162 (38%) | 112 (26%) | 40 (9%) |
| Grade 7 | 153 (36%) | 114 (27%) | 89 (21%) | 72 (17%) |
| Grade 8 | 164 (41%) | 99 (25%) | 69 (17%) | 64 (16%) |
| High School | 81 (27%) | 96 (32%) | 32 (11%) | 87 (29%) |
| Math | | | | |
| Grade 3 | 188 (50%) | 95 (25%) | 89 (24%) | 3 (1%) |
| Grade 4 | 127 (33%) | 165 (43%) | 83 (22%) | 10 (3%) |
| Grade 5 | 121 (29%) | 184 (45%) | 97 (23%) | 11 (3%) |
| Grade 6 | 232 (54%) | 53 (12%) | 106 (25%) | 35 (8%) |
| Grade 7 | 231 (55%) | 21 (5%) | 149 (35%) | 21 (5%) |
| Grade 8 | 171 (45%) | 80 (21%) | 125 (33%) | 6 (2%) |
| High School | 169 (57%) | 50 (17%) | 70 (24%) | 7 (2%) |
| Science | | | | |
| Grade 5 | 168 (41%) | 81 (20%) | 98 (24%) | 60 (15%) |
| Grade 8 | 161 (42%) | 63 (16%) | 79 (20%) | 84 (22%) |
| High School | 81 (28%) | 55 (19%) | 92 (32%) | 59 (21%) |
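The percentages in the AMO table are row shares of each grade's total count. The sketch below recomputes them from the tabled counts, along with the share at AMO 3 or AMO 4. Treating AMO 3 (Meets) and AMO 4 (Exceeds) together as "proficient" is an assumption based on the level labels, and the function name is hypothetical. Because each cell is rounded independently, the rounded percentages for a row may not sum to exactly 100. For example, High School ELA sums to 99.

```python
def amo_summary(counts: tuple[int, int, int, int]) -> tuple[int, list[int], int]:
    """Return (total, whole-number percentage per AMO level, percentage at AMO 3 or 4)."""
    total = sum(counts)
    percents = [round(100 * c / total) for c in counts]
    meets_or_exceeds = round(100 * (counts[2] + counts[3]) / total)
    return total, percents, meets_or_exceeds


# Counts (AMO 1, AMO 2, AMO 3, AMO 4) taken from the table above.
examples = {
    "ELA Grade 3": (77, 192, 97, 13),
    "ELA High School": (81, 96, 32, 87),
    "Science High School": (81, 55, 92, 59),
}
for label, counts in examples.items():
    total, percents, proficient = amo_summary(counts)
    print(label, total, percents, f"AMO 3+4: {proficient}%")
```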

Across grades, the most common (modal) AMO for ELA was AMO 1 in 2 of 7 grades and AMO 2 in 5 of 7 grades; for math, AMO 1 in 5 of 7 grades and AMO 2 in 2 of 7 grades; and for science, AMO 1 in 2 of 3 grades and AMO 3 in 1 of 3 grades.

Across subjects, AMO 4 often contains fewer students than the other three levels. Because this is the highest AMO, this is unsurprising; however, ELA and science have much higher rates of AMO 4 than math in most grades.

In some cases, an AMO level spans a very small range of scaled scores because the range of observed scores is small. The smallest is Math grade 7, where the level spans only scaled scores 207 to 209. Math grades 6 and 8 also have very narrow ranges, each spanning 4 scaled score points.

For comparison, the smallest AMO range in the other content areas is 7 scaled score points (high school ELA), followed by science at 10. When a range is this narrow, measurement error can make a greater difference in classification; in principle, this could lower test-retest consistency. This may explain the higher percentages in AMO 3 for math in grades 7 and 8 compared with other grades of math.

Adding one or two more low-complexity items to the relevant mathematics tests may also help address this concern.
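The concern about narrow AMO ranges can be illustrated with a simple normal-error model: if a student's observed scaled score equals a true score plus normally distributed error, the chance that the observed score stays inside the student's AMO range shrinks as the range narrows. The sketch below assumes a hypothetical standard error of measurement (SEM) of 2 scaled-score points, treats scaled scores as continuous, and places the student at the midpoint of the range (the most favorable case). None of these values come from ORExt technical data, and the function names are illustrative.

```python
import math


def normal_cdf(x: float) -> float:
    """Standard normal cumulative distribution function via math.erf."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def prob_stays_in_band(true_score: float, lower: float, upper: float, sem: float) -> float:
    """P(lower <= observed <= upper) when observed = true_score + N(0, sem**2) error."""
    return normal_cdf((upper - true_score) / sem) - normal_cdf((lower - true_score) / sem)


HYPOTHETICAL_SEM = 2.0  # assumption for illustration; not a reported ORExt SEM

# Range widths (scaled-score points) roughly matching those discussed above.
for width in (2, 4, 7, 10):
    midpoint = width / 2  # student placed at the centre of a range [0, width]
    p = prob_stays_in_band(midpoint, 0.0, float(width), HYPOTHETICAL_SEM)
    print(f"range width {width:>2}: P(observed score in same AMO) = {p:.2f}")
```

Under these assumptions, the observed score of a student at the midpoint of a 2-point range falls in the same AMO only about 38% of the time, compared with about 99% for a 10-point range; students near a cut score fare worse in every case.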

| Content and Grade | AMO 1 (Does Not Yet Meet) | AMO 2 (Nearly Meets) | AMO 3 (Meets) | AMO 4 (Exceeds) |
|---|---|---|---|---|
| Reading | | | | |
| Grade 3 | 73 (19%) | 213 (56%) | 75 (20%) | 18 (5%) |
| Grade 4 | 112 (29%) | 118 (30%) | 120 (31%) | 41 (10%) |
| Grade 5 | 124 (30%) | 160 (38%) | 69 (17%) | 64 (15%) |
| Grade 6 | 122 (29%) | 158 (37%) | 88 (21%) | 59 (14%) |
| Grade 7 | 130 (30%) | 130 (30%) | 106 (25%) | 62 (14%) |
| Grade 8 | 164 (41%) | 94 (24%) | 66 (17%) | 72 (18%) |
| High School | 81 (27%) | 107 (36%) | 30 (10%) | 77 (26%) |
| Writing | | | | |
| Grade 3 | 104 (27%) | 166 (44%) | 76 (20%) | 33 (9%) |
| Grade 4 | 163 (42%) | 99 (25%) | 73 (19%) | 56 (14%) |
| Grade 5 | 154 (37%) | 153 (37%) | 33 (8%) | 77 (18%) |
| Grade 6 | 133 (31%) | 133 (31%) | 93 (22%) | 68 (16%) |
| Grade 7 | 179 (42%) | 97 (23%) | 112 (26%) | 40 (9%) |
| Grade 8 | 173 (44%) | 104 (26%) | 35 (9%) | 84 (21%) |
| High School | 84 (28%) | 96 (32%) | 20 (7%) | 96 (32%) |

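For the subscores, the most common AMOs across grades were AMO 1 (5 of 7 grades), AMO 2 (3 of 7 grades), and AMO 4 (1 of 7 grades) for writing, and AMO 1 (2 of 7 grades), AMO 2 (5 of 7 grades), and AMO 3 (1 of 7 grades) for reading. These counts sum to more than 7 because of ties: writing ties for the most common AMO in grade 6 (AMO 1 and AMO 2) and high school (AMO 2 and AMO 4), and reading ties in grade 7 (AMO 1 and AMO 2).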
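The subscore tallies count a grade toward every AMO that ties for the largest count, which is why they can sum to more than 7. A minimal sketch of that counting rule, using the writing counts from the subscore table; the function names are illustrative, and treating ties this way is inferred from the reported counts rather than stated in the source.

```python
from collections import Counter

# Writing subscore counts per grade, in AMO order 1-4, copied from the subscore table.
WRITING = {
    "Grade 3": [104, 166, 76, 33],
    "Grade 4": [163, 99, 73, 56],
    "Grade 5": [154, 153, 33, 77],
    "Grade 6": [133, 133, 93, 68],
    "Grade 7": [179, 97, 112, 40],
    "Grade 8": [173, 104, 35, 84],
    "High School": [84, 96, 20, 96],
}


def modal_amos(counts: list[int]) -> list[int]:
    """Return every AMO level (1-4) with the maximum count; ties keep all tied levels."""
    top = max(counts)
    return [level for level, n in enumerate(counts, start=1) if n == top]


def tally_modal_amos(table: dict[str, list[int]]) -> Counter:
    """Count, across grades, how often each AMO level is (or ties for) the most common."""
    tally = Counter()
    for counts in table.values():
        tally.update(modal_amos(counts))
    return tally


print(tally_modal_amos(WRITING))
```

Run on the writing counts, this yields AMO 1 for 5 grades, AMO 2 for 3 grades, and AMO 4 for 1 grade; applied to the reading rows, it yields 2, 5, and 1 grades for AMO 1, 2, and 3.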

Compared with math, science, and overall ELA, these subscores spread students more evenly across the AMO categories, on average across grades. Compared with other grades, grades 7 and 8 writing have relatively large AMO 1 groups. For these grades, a better balance might be achieved by replacing some existing difficult items with easier ones.

3.4 Validity Based on Relations to Other Variables

Perhaps the best model for understanding criterion-related evidence comes from Campbell and Fiske (1959) and their description of multitrait-multimethod analysis (here, ‘trait’ can be read as ‘skill’). In this approach, several different traits are measured using several different methods to produce a correlation matrix that should show specific patterns supporting the claim being made (that is, patterns that provide positive validation evidence). Sometimes these measures assess the same or similar skills, abilities, or traits, and other times they assess different ones. The data presented here quite consistently show higher relations among items within an academic subject than between academic subjects. Data are also presented in which item performance is totaled within disability categories, with the expectation that the resulting relations reflect appropriate differences (Tindal et al., 2003).
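To make the within-versus-between comparison concrete, the sketch below sorts the grade 3 total-score correlations from Section 3.4C into within-subject pairs (ELA with its reading and writing subscores) and between-subject pairs (each ELA score with math), then compares their means. This is a simplified, single-method illustration in the spirit of Campbell and Fiske's logic, not a full multitrait-multimethod analysis, and it uses total scores rather than items; the data layout and function name are illustrative.

```python
from itertools import combinations
from statistics import mean

# Grade 3 correlations copied from the content-area table in Section 3.4C.
GRADE3 = {
    frozenset({"ELA", "Math"}): 0.81,
    frozenset({"ELA", "Reading"}): 0.97,
    frozenset({"ELA", "Writing"}): 0.91,
    frozenset({"Math", "Reading"}): 0.78,
    frozenset({"Math", "Writing"}): 0.75,
    frozenset({"Reading", "Writing"}): 0.81,
}

# Broad subject for each score: reading and writing are ELA subdomains.
SUBJECT = {"ELA": "ELA", "Reading": "ELA", "Writing": "ELA", "Math": "Math"}


def split_correlations(corr, subject):
    """Separate pairwise correlations into within-subject and between-subject lists."""
    within, between = [], []
    for a, b in combinations(sorted(subject), 2):
        r = corr[frozenset({a, b})]
        (within if subject[a] == subject[b] else between).append(r)
    return within, between


within, between = split_correlations(GRADE3, SUBJECT)
print(f"mean within-subject r  = {mean(within):.3f}")
print(f"mean between-subject r = {mean(between):.3f}")
```

With these values the within-subject mean (about 0.90) exceeds the between-subject mean (0.78), although the pattern holds on average rather than for every pair: reading and writing (0.81) correlate no more strongly than ELA and math (0.81).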

3.4A Convergent and Divergent Validity Documentation

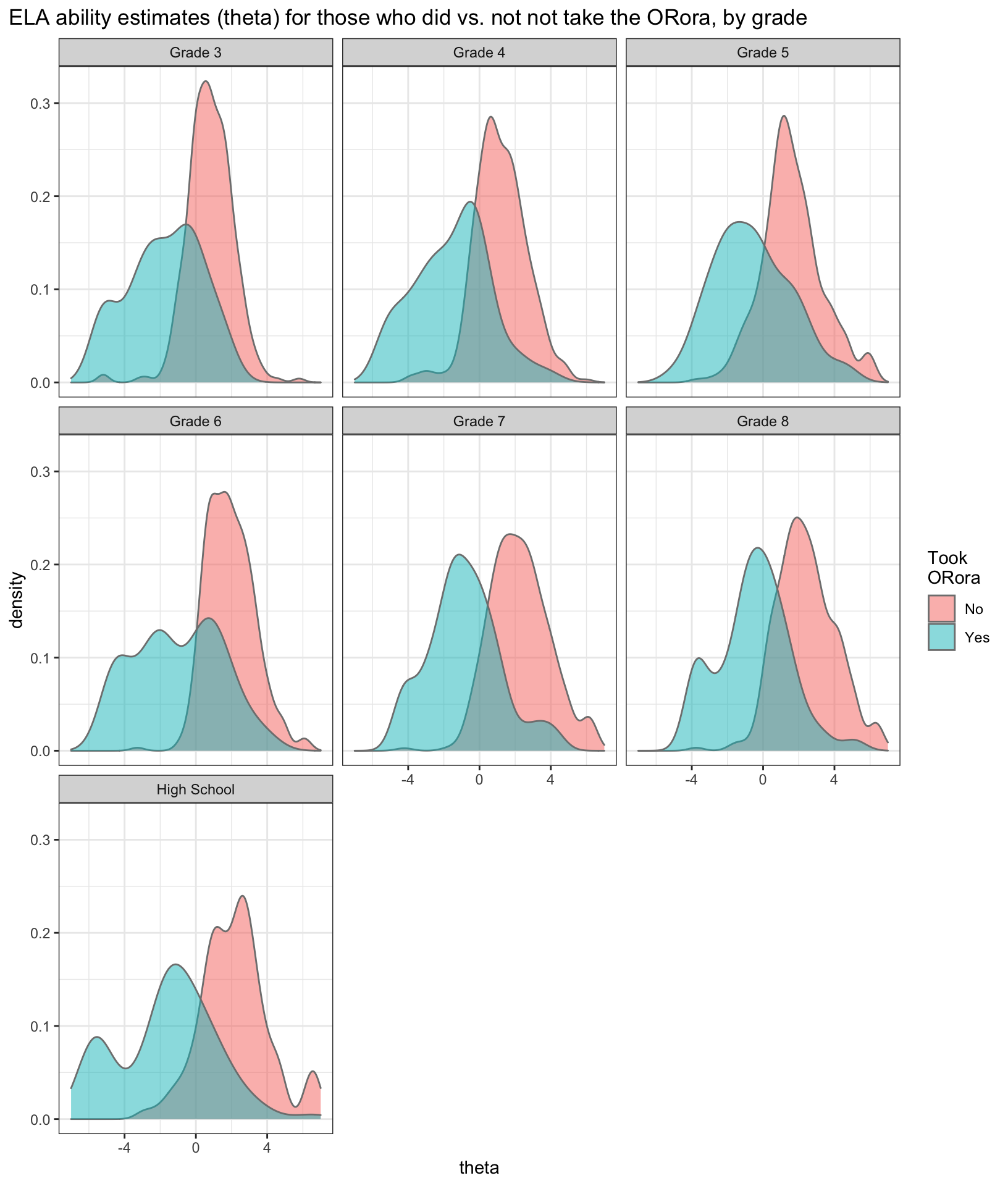

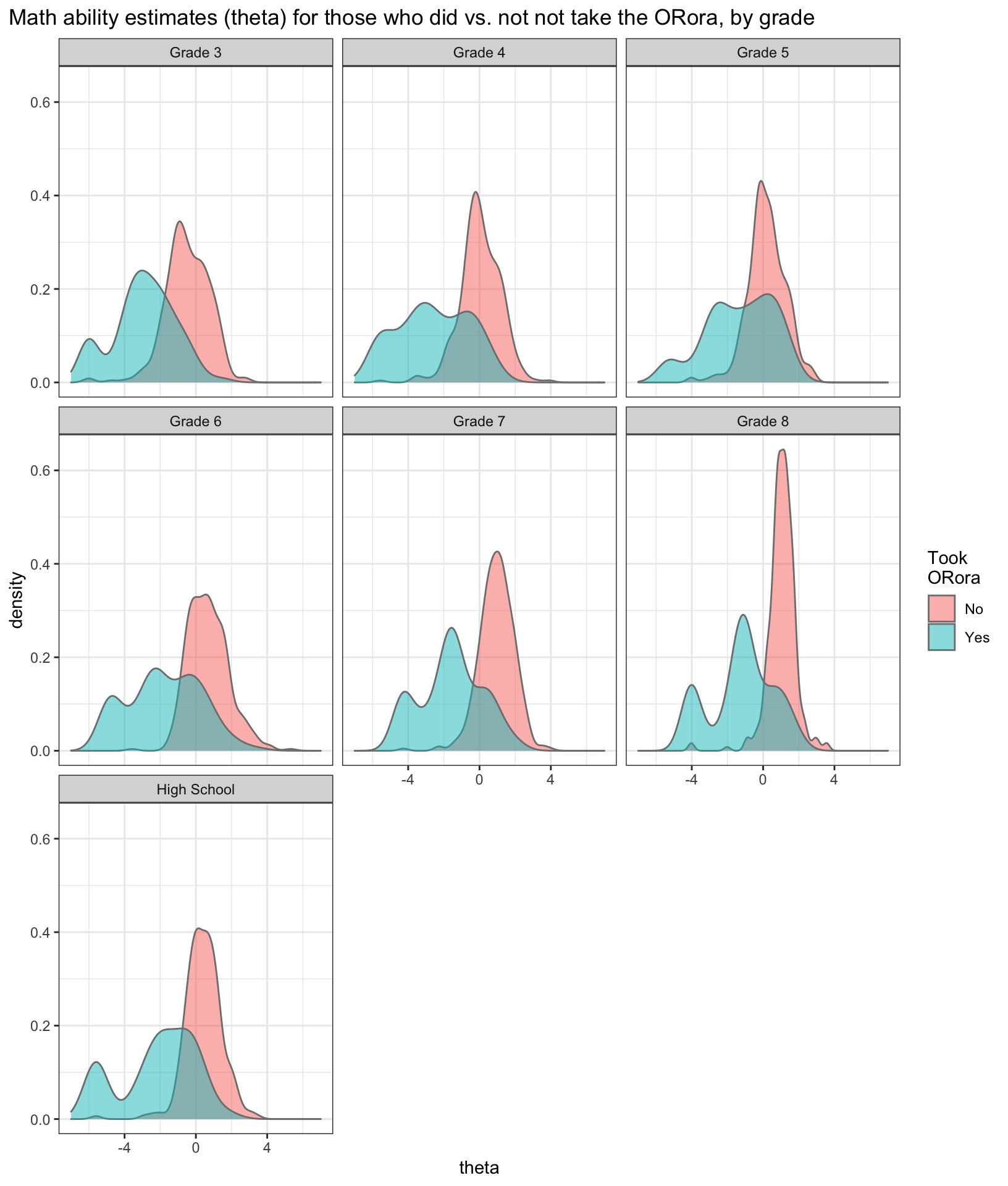

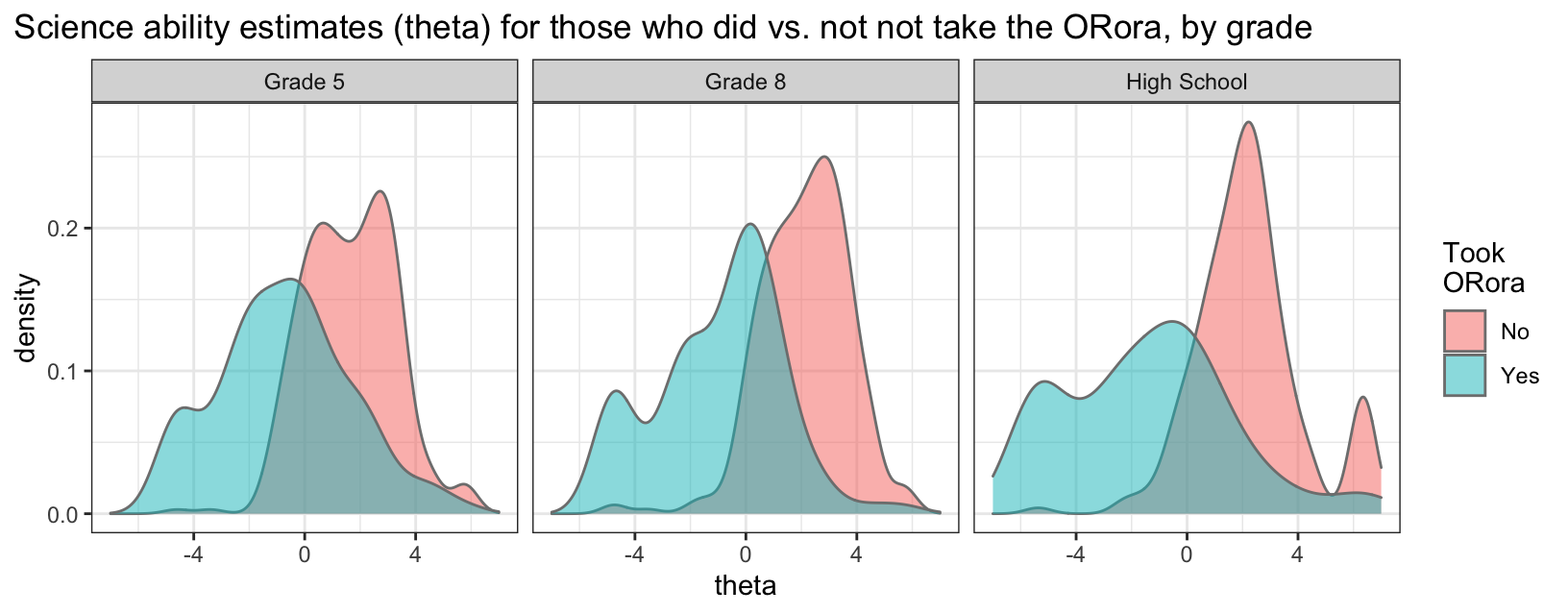

Criterion validity information is difficult to document for AA-AAAS, as most SWSCD do not participate in any standardized assessment other than the ORExt and/or the ORora in Oregon. Divergent validity evidence comes from comparing ORExt results with ORora outcomes, which show that students whose ORExt assessments are discontinued exhibit serious limitations in attention, basic math skills, and receptive and expressive communication skills. The density distributions show very different central tendencies (i.e., means and medians) for students who did and did not take the ORora, across all grades and content areas.

| | ORora | ELA | Math | Science |
|---|---|---|---|---|
| ORora | 1 | -- | -- | -- |
| ELA | 0.318 | 1 | -- | -- |
| Math | 0.226 | 0.667 | 1 | -- |
| Science | 0.422 | 0.767 | 0.653 | 1 |

Pearson correlations were computed between the total raw score on each ORExt content area and the total raw score on the ORora to examine the relationship between overall performance on the two assessments. The correlation with ORora scores was 0.318 for ELA, 0.226 for math, and 0.422 for science. As expected, these low-to-moderate correlations provide divergent validity evidence for the ORExt. A strong relationship is not expected, because students whose ORExt testing is discontinued are generally unable to access the academic content on the ORExt, even with the requisite reductions in depth, breadth, and complexity.

Furthermore, the correlations among content areas for students who took the ORora (ELA ~ Math = 0.667, ELA ~ Science = 0.767, Math ~ Science = 0.653) differ from the corresponding correlations for students who did not take the ORora (see the content-area correlations below).
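The Pearson product-moment correlation used throughout this section divides the covariance of two paired score lists by the product of their standard deviations. A minimal sketch computed directly from that definition; the function name is illustrative, and the score lists are hypothetical, not ORExt or ORora data.

```python
import math


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson product-moment correlation between two paired score lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two paired lists of equal length >= 2")
    mx = sum(x) / len(x)
    my = sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))  # cross-products of deviations
    sxx = sum((a - mx) ** 2 for a in x)                   # squared deviations of x
    syy = sum((b - my) ** 2 for b in y)                   # squared deviations of y
    return sxy / math.sqrt(sxx * syy)


# Hypothetical paired totals for five students (not real assessment data).
orext_ela_total = [12, 25, 31, 18, 40]
orora_total = [30, 28, 45, 22, 35]
print(round(pearson_r(orext_ela_total, orora_total), 3))
```

In Python 3.10 and later, `statistics.correlation` returns the same Pearson coefficient and can be used instead of a hand-written function.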

Convergent evidence that the ORExt assesses appropriate academic content is provided by QA and QT responses to the consequential validity survey. Respondents generally agreed that “The items in the Oregon Extended Assessment accurately reflect the academic content (what the student should know) that my students with significant cognitive disabilities should be learning, as defined by grade level content standards (CCSS/NGSS) and the Essentialized Assessment Frameworks” (85% Strongly Agree or Agree). They also agreed that “The items in the Oregon Extended Assessment, which primarily ask students to match, identify, or recognize academic content, are appropriate behaviors to review to determine what my students with significant cognitive disabilities are able to do” (85% Strongly Agree or Agree). These results indicate that the ORExt samples academic domains that QAs and QTs in the field deem appropriate. See the Consequential Validity Survey Results for the complete consequential validity study results.

3.4B Analyses Within and Across Subject Areas

Correlational analyses were conducted to further explore the validity of the ORExt. The purpose of the analyses and the anticipated results are described first; the observed results are then discussed, followed by an overall evaluative judgment of the test's validity.

Correlations were examined among students’ total scores across subject areas to investigate how strongly students’ scores in one subject were related to their scores in other subjects. Exceedingly high correlations (e.g., above .90) would indicate that the score a student receives in an individual subject has less to do with the intended construct (e.g., reading) than with factors idiosyncratic to the student. For example, if all subject areas correlated at .95, that would provide strong evidence that the tests were measuring a global, student-specific construct (e.g., intelligence) rather than the individual subject constructs. At the same time, the tests would be expected to correlate fairly strongly because the same students were assessed in every subject. Therefore, moderately strong correlations (e.g., .70 to .90) would be expected simply because of this within-subjects design, since each correlation also captures idiosyncratic variance associated with the individual student.
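The thresholds in this paragraph can also be read as shared variance, $r^2$, the proportion of variance in one subject's scores that is linearly associated with another subject's scores. The arithmetic below illustrates that reading only; it ignores measurement error, which tends to lower observed correlations.

$$
\begin{aligned}
r = .70 &\;\Rightarrow\; r^2 = .49 \quad (51\%\ \text{not shared}) \\
r = .90 &\;\Rightarrow\; r^2 = .81 \quad (19\%\ \text{not shared}) \\
r = .95 &\;\Rightarrow\; r^2 \approx .90 \quad (10\%\ \text{not shared})
\end{aligned}
$$

At $r = .95$, roughly nine-tenths of the variance would be common to both subjects, leaving little room for subject-specific skill, which is why correlations that high would point toward a global construct.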

3.4C Correlational Analyses Results

Full results of the Pearson product-moment correlation analyses by content area and grade level are reported below. All correlations are statistically significant, yet the overall pattern across content areas suggests that different, though strongly related, constructs are being measured.

| | ELA | Math | Reading | Writing |
|---|---|---|---|---|
| Grade 3 | | | | |
| ELA | 1 | | | |
| Math | 0.81 | 1 | | |
| Reading | 0.97 | 0.78 | 1 | |
| Writing | 0.91 | 0.75 | 0.81 | 1 |
| Grade 4 | | | | |
| ELA | 1 | | | |
| Math | 0.82 | 1 | | |
| Reading | 0.96 | 0.8 | 1 | |
| Writing | 0.9 | 0.7 | 0.78 | 1 |
| Grade 6 | | | | |
| ELA | 1 | | | |
| Math | 0.87 | 1 | | |
| Reading | 0.97 | 0.85 | 1 | |
| Writing | 0.93 | 0.8 | 0.86 | 1 |
| Grade 7 | | | | |
| ELA | 1 | | | |
| Math | 0.8 | 1 | | |
| Reading | 0.97 | 0.78 | 1 | |
| Writing | 0.93 | 0.75 | 0.85 | 1 |

| | ELA | Math | Reading | Science | Writing |
|---|---|---|---|---|---|
| Grade 5 | | | | | |
| ELA | 1 | | | | |
| Math | 0.77 | 1 | | | |
| Reading | 0.97 | 0.74 | 1 | | |
| Science | 0.77 | 0.74 | 0.75 | 1 | |
| Writing | 0.93 | 0.72 | 0.84 | 0.72 | 1 |
| Grade 8 | | | | | |
| ELA | 1 | | | | |
| Math | 0.77 | 1 | | | |
| Reading | 0.97 | 0.72 | 1 | | |
| Science | 0.85 | 0.83 | 0.83 | 1 | |
| Writing | 0.93 | 0.71 | 0.85 | 0.78 | 1 |
| High School | | | | | |
| ELA | 1 | | | | |
| Math | 0.88 | 1 | | | |
| Reading | 0.97 | 0.86 | 1 | | |
| Science | 0.9 | 0.87 | 0.89 | 1 | |
| Writing | 0.95 | 0.81 | 0.87 | 0.83 | 1 |

Across domains (i.e., ELA, science, and math), the Pearson product-moment correlations ranged as follows:

- ELA and math: 0.766 to 0.88

- ELA and science: 0.771 to 0.895

- Math and science: 0.741 to 0.866

Across domains, scores are clearly correlated: students who score higher on one test tend to score higher on the others. However, these correlations are low enough to support the conclusion that different cognitive domains are being measured.
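The across-domain ranges are the minimum and maximum of the per-grade correlations in the two Section 3.4C tables. A small sketch that recomputes them from the two-decimal table values; the dictionary and function names are illustrative, and because the tables are rounded, the results match the three-decimal ranges only to two decimals.

```python
# Per-grade correlations (two decimals) copied from the Section 3.4C tables.
ELA_MATH = {"3": 0.81, "4": 0.82, "5": 0.77, "6": 0.87, "7": 0.80, "8": 0.77, "HS": 0.88}
ELA_SCIENCE = {"5": 0.77, "8": 0.85, "HS": 0.90}
MATH_SCIENCE = {"5": 0.74, "8": 0.83, "HS": 0.87}


def corr_range(by_grade: dict[str, float]) -> tuple[float, float]:
    """Smallest and largest correlation across grades for one pair of domains."""
    values = by_grade.values()
    return min(values), max(values)


for label, table in [("ELA and math", ELA_MATH),
                     ("ELA and science", ELA_SCIENCE),
                     ("Math and science", MATH_SCIENCE)]:
    low, high = corr_range(table)
    print(f"{label}: {low:.2f} to {high:.2f}")
```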

For ELA and its subdomains (reading and writing), the correlations ranged as follows:

- ELA and reading: 0.965 to 0.973

- ELA and writing: 0.897 to 0.945

- Reading and writing: 0.781 to 0.867

Very high correlations are observed within ELA. ELA and reading are so highly correlated that they may be measuring nearly the same information. Reading and writing, however, correlate less strongly with one another, supporting the assumption that they measure distinct constructs.
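One possible contributor to the very high ELA and reading correlation is part-whole overlap. If the ELA total is formed by adding the reading and writing subscores (an assumption; this section does not state how the ELA total is computed), each subscore is correlated with a total that contains it. For a total T = P + O, the correlation between P and T depends only on the standard deviations of the two parts and their correlation. The sketch below uses hypothetical standard deviations together with the grade 3 reading and writing correlation of 0.81; the function name is illustrative.

```python
import math


def part_whole_r(sd_part: float, sd_other: float, r_parts: float) -> float:
    """Correlation of a part score P with the total T = P + O.

    cov(P, T) = var(P) + cov(P, O); var(T) = var(P) + var(O) + 2 cov(P, O).
    """
    cov_po = r_parts * sd_part * sd_other
    cov_pt = sd_part ** 2 + cov_po
    var_t = sd_part ** 2 + sd_other ** 2 + 2 * cov_po
    return cov_pt / (sd_part * math.sqrt(var_t))


# Hypothetical SDs (assumption): reading contributes more score variance than writing.
SD_READING, SD_WRITING = 8.0, 4.0
R_READING_WRITING = 0.81  # grade 3 reading and writing correlation from Section 3.4C

print("reading vs. ELA total:", round(part_whole_r(SD_READING, SD_WRITING, R_READING_WRITING), 3))
print("writing vs. ELA total:", round(part_whole_r(SD_WRITING, SD_READING, R_READING_WRITING), 3))
```

With these made-up SDs, the reading and total correlation is about 0.98 and the writing and total correlation about 0.91, so part-whole overlap alone can produce values as high as those observed; the part with the larger variance dominates the total.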